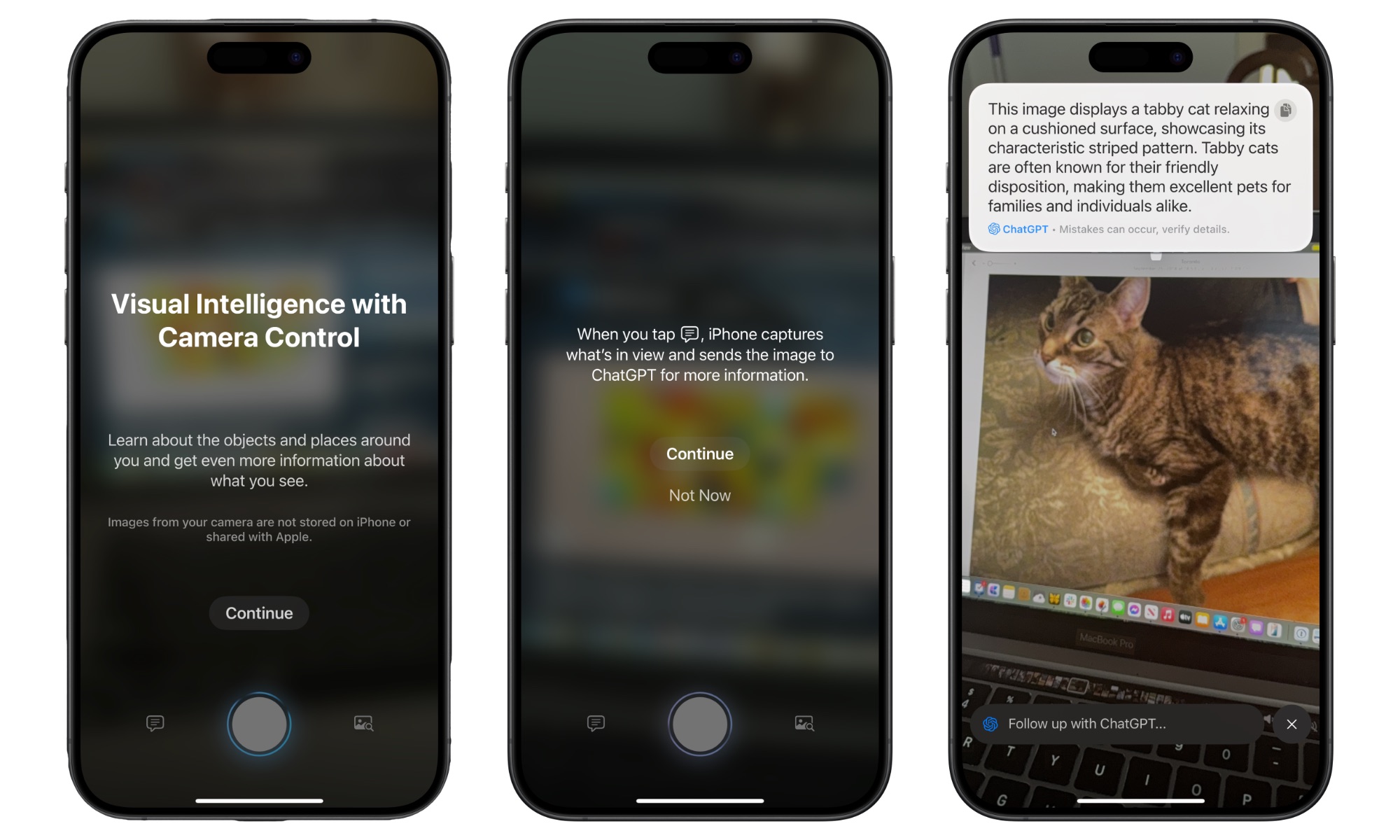

Start Using Visual Intelligence

Visual intelligence is a relatively new feature of Apple Intelligence. With it, you can use your iPhone’s camera to get more information about an object, animal, plant, or even a business. Depending on what you ask, your iPhone draws on Apple Intelligence, ChatGPT, or Google to give you the most accurate answer it can.

Visual intelligence is currently available only on the iPhone 16 lineup, including the iPhone 16e. However, it’s coming to the iPhone 15 Pro and iPhone 15 Pro Max with iOS 18.4, which should launch next week.

If you have an iPhone 16, iPhone 16 Plus, iPhone 16 Pro, or iPhone 16 Pro Max, all you need to do to start using visual intelligence is press and hold the Camera Control button on the lower right side of your iPhone.

The iPhone 16e can also use visual intelligence, but since it doesn’t have a Camera Control button, you’ll need to add the feature to Control Center or assign it to the Action button. The same applies to the iPhone 15 Pro models, which also lack Camera Control. We’ll show you how to do both in a moment.

Next, point your camera at the object you want to learn more about and tap one of the three buttons at the bottom of the screen. The Ask button on the left sends what you’re looking at to ChatGPT, so you can ask questions about it.

The Search button on the right searches the web for more information about the object you’re pointing at. The button in the middle captures a temporary snapshot of the object, which you can then use with the Ask or Search button.

Overall, this feature works well. You can quickly translate text, get more information about a business, or learn more about animals and plants. That said, it’s still far from perfect. ChatGPT sometimes misidentifies objects, so take what visual intelligence tells you with a grain of salt.