The First Beta of iOS 15.2 Has Already Landed | Here’s What’s New

Credit: Jesse Hollington

As usual, Apple isn’t wasting any time pushing forward with the next major iOS 15 point release. A mere two days after the public release of iOS 15.1, Apple has already pushed out the first beta of iOS 15.2 to registered developers, and a public beta is likely coming any moment now.

iOS 15.2 isn’t shaping up to be quite as groundbreaking as iOS 15.1, which brought the promised SharePlay feature, along with support for COVID-19 vaccination cards in Apple Wallet and some nice enhancements for iPhone 13 Pro users.

That said, there’s still some unfinished business with iOS 15, and this upcoming point release looks set to deliver at least one more previously announced feature, the new App Privacy Report, along with a few other polishes and tweaks. Read on for four new things we can expect to see in iOS 15.2.

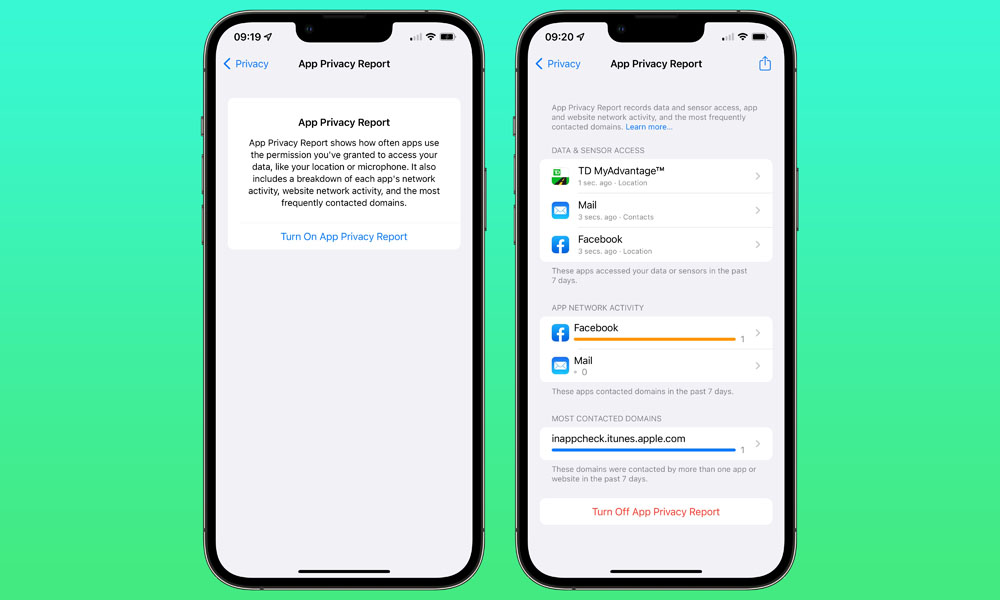

App Privacy Report

This is the next logical step beyond the App Privacy Labels that Apple introduced to the App Store last year, promising to actively monitor what data your apps are accessing, rather than simply trusting what developers are saying about their own apps.

App Privacy Labels, which were somewhat like the nutrition labels you see on food, were intended to show you what kind of data an app would access before you downloaded it. The problem is that this was based entirely on the honor system.

Apple required developers to complete a comprehensive questionnaire to outline what kind of data their apps ask users for, and what else they access on your iPhone, iPad, or Mac. However, as far as we know, Apple did nothing to verify these reports.

Likewise, even though Apple did require developers to submit this information before updating their apps, it didn’t enforce it until that happened, and at least one big developer — Google — avoided the issue for weeks by simply failing to update its apps. In retrospect, it was easy to see why.

Apple’s new App Privacy Report will take this beyond what developers are willing to share, with features baked into iOS 15 that will keep track of what an app is actually doing on your device.

This will include things like tracking how often an app has accessed your location, camera, or microphone, as well as access to sensitive data like photos and contacts. It will also take a page out of Safari’s new Privacy Reports to let you know what trackers may be working away behind your back, reporting information to ad networks and elsewhere.

While some aspects of the feature already showed up in iOS 15, where a “Record App Activity” setting could be found in the Privacy section of the Settings app, it wasn’t entirely functional. Specifically, there was no way to actually see any data, and it’s not clear whether iOS 15 was even recording anything.

With the first beta of iOS 15.2, however, the setting has been more appropriately renamed App Privacy Report, and it would appear that it’s fully functional. Further, even Apple’s own first-party apps aren’t exempt from this, so you’ll know what’s going on whether that activity is coming from the built-in Mail app or the usual suspects like Facebook.

More Emergency SOS Options

The Emergency SOS feature introduced back in iOS 10.2 has been tweaked to offer more consistency and less risk of accidentally triggering “911” calls to Emergency Services.

As of now, Emergency SOS works differently depending on the iPhone model you’re using. On 2017 and later models, starting with the iPhone 8, iPhone 8 Plus, and iPhone X, the sequence is initiated by pressing and holding down the side button and volume buttons for about five seconds.

However, on older models, the iPhone 7 and earlier, the feature is instead activated by rapidly pressing the side or top button five times.

With iOS 15.2, you’ll soon be able to choose which method you want to use, or even enable both, with two new toggle switches that can be found under Settings > Emergency SOS: Call with Hold and Call with 5 Presses.

Apple also notes that either of these methods will now trigger an eight-second countdown before calling Emergency Services, which is an increase from the previous three-second timer. This will give you more time to change your mind should you trigger the feature accidentally.

Notably, at least in this first beta, while the timer starts at eight seconds, the audible alert siren won’t begin sounding until the countdown reaches the five-second mark.
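For readers who like to see the timing spelled out, here’s a minimal Python sketch of the countdown as described above. It’s purely an illustrative model, not Apple’s code: the function names are made up, and it assumes the siren switches on at the moment the countdown hits five seconds remaining.

```python
# Illustrative model only; this is not Apple's implementation.
# Both constants come from the behavior reported for the first iOS 15.2 beta.
COUNTDOWN_SECONDS = 8  # length of the countdown before the call is placed
SIREN_STARTS_AT = 5    # assumption: siren is on once this many seconds remain


def countdown_ticks(total=COUNTDOWN_SECONDS, siren_at=SIREN_STARTS_AT):
    """Return a (seconds_remaining, siren_on) pair for each tick of the countdown."""
    return [(remaining, remaining <= siren_at) for remaining in range(total, 0, -1)]


def silent_seconds(total=COUNTDOWN_SECONDS, siren_at=SIREN_STARTS_AT):
    """Count the ticks that pass before the siren starts sounding."""
    return sum(1 for _, siren_on in countdown_ticks(total, siren_at) if not siren_on)


if __name__ == "__main__":
    for remaining, siren_on in countdown_ticks():
        print(f"{remaining}s remaining, siren {'on' if siren_on else 'off'}")
```

Under those assumptions, the first three seconds of the countdown pass silently, which is your window to cancel quietly if you triggered the feature by mistake.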

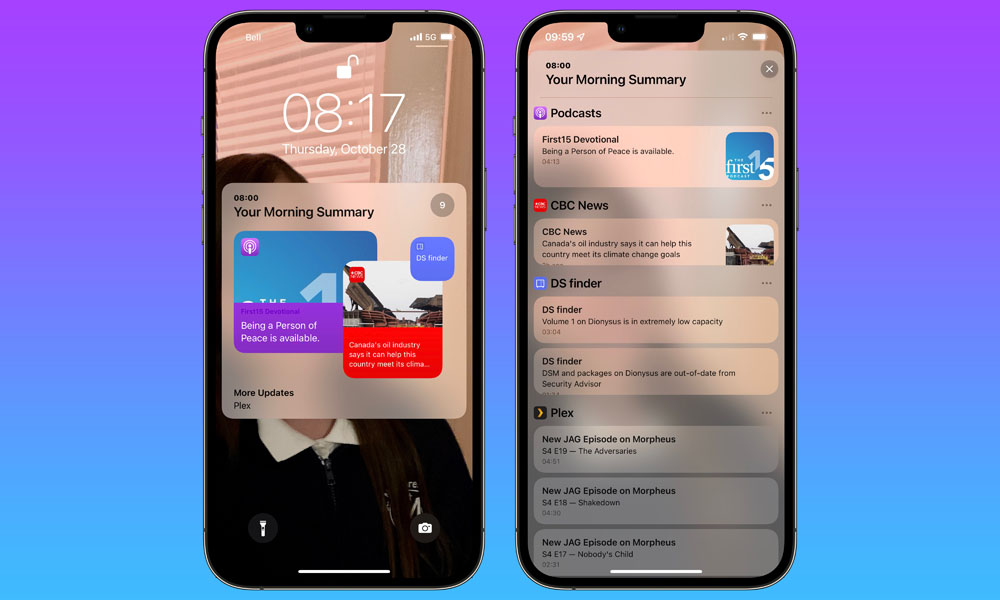

New Notification Summary Layout

The new iOS 15.2 beta also tweaks the design of the Notification Summary window that was added in iOS 15.0.

As part of the new and improved notification system in iOS 15, the summary view groups less critical notifications into “digests” that can then be reviewed at certain times of the day. It’s a feature that’s especially useful when combined with the new Focus Modes to give you a greater level of control over the things that would otherwise distract you.

When it launched in iOS 15.0, the Notification Summary was a fairly bland view that simply highlighted a couple of top notifications, with a button that could be tapped to expand it further.

Apple has dressed this up in iOS 15.2, offering a floating card view that highlights the top two or three notifications in a more widget-like layout. Not only is it a more attractive look, but it also helps to set the Notification Summary apart visually from other notifications that appear on the Lock Screen.

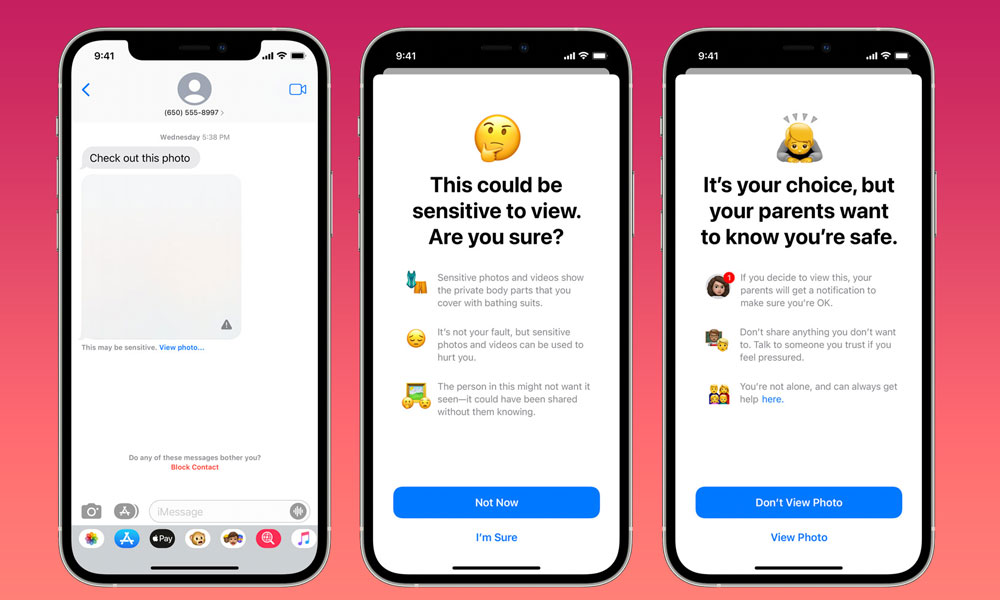

Prepping for Communication Safety

Not surprisingly, the iOS 15.2 beta hides a few things in the code that don’t yet appear to be ready for public view, and according to the folks at MacRumors, this time around we’re seeing evidence that Apple is laying the groundwork for its new Communication Safety feature in Messages.

Announced as part of several new child safety initiatives back in August, Apple described the Communication Safety feature as an opt-in service for families to help protect kids from sending and receiving sexually explicit images. The idea was that iOS 15 would use machine learning to identify such photos, blurring them out and warning children that maybe they shouldn’t be looking at them.

The child would still have the option to proceed anyway, but kids under 13 would also be told their parents would be notified if they chose to do so. Apple noted that Communication Safety in Messages would only be available to users in an Apple Family Sharing group, would only apply to users under the age of 18, and would have to be explicitly turned on by parents before it would be active at all.

The Communication Safety in Messages announcement was accompanied by another significantly more controversial feature, CSAM Detection, which was designed to scan photos uploaded to iCloud Photo Library against a known database of child sexual abuse materials (CSAM).

By Apple’s own admission, it screwed up by announcing all of these features at the same time, since many failed to understand the differences between CSAM Detection and Communication Safety in Messages. Amidst the negative pushback from privacy advocates and consumers alike, Apple announced it would be delaying its plans to give it time to collect input, rethink things, and make improvements.

While last month’s announcement suggested that the entire set of features was being put on the back burner, code in iOS 15.2 reveals that Apple may be planning to implement the potentially less controversial of the two sooner rather than later. Again, however, this is just foundational code, so we’d be careful about reading too much into it at this point. After all, code for AirTags was found in the first iOS 13 betas two years before they saw the light of day.

Note that there’s also been no evidence found of the much more widely criticized CSAM Detection in the iOS 15.2 code. This suggests that Apple may be taking the wiser approach of rolling out the two features separately, thereby avoiding the confusion from August, when many users conflated the relatively innocuous family scanning of kids’ content in Messages with the more serious law enforcement aspects of the CSAM Detection feature.

Communication Safety was intended solely to help parents know what their children were doing, with absolutely no information leaving the family’s devices, while CSAM Detection was intended to look for known child abuse content within users’ iCloud Photo Libraries. It’s fair to say that Apple is going to have to involve many more stakeholders and do its political homework properly before CSAM Detection makes a return — if it makes a return at all.