5 Unexpected Siri Answers That Shocked Us All

Credit: Image via @CeciMula / Unsplash

Despite the criticism it receives, Siri is still a pretty useful tool for performing tasks or accessing routine features of an iPhone. Even so, the digital assistant can give obviously incorrect, humorously wrong, or even offensive answers at times. Continue reading to learn about five examples of Siri giving answers that were completely off. Note: This article contains language some might find distasteful.

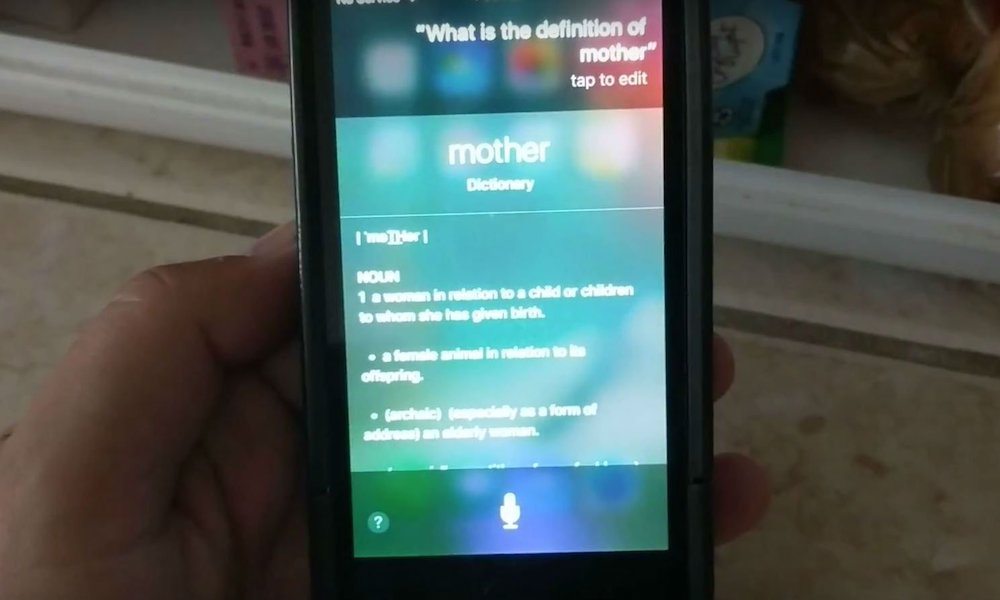

5 Siri Swears

Earlier this week, intrepid Reddit users discovered a way to get Siri to say a pretty explicit word. Basically, when asked for a definition of the word “mother,” Siri would inform users that it’s short for a particular expletive that contains the word “mother” (you can probably guess which one).

To be fair, this only occurred when users asked Siri for the second definition of the word, which required them to actively say “yes.” Siri also appears to have been pulling the definition from the Oxford Dictionary. While that means it’s not necessarily Apple’s fault, it does seem like an oversight, particularly as Siri isn’t known for swearing. Apple seems to have silenced Siri's foul mouth in the days since the incident surfaced, however.

4 The Bulgarian National Anthem

If you were alive in 2017, you know how big of a hit “Despacito” was. It was everywhere. But despite its popularity, the track was never officially adopted by any country as its national anthem. That may sound too obvious to need saying, but last year Siri seemed to think otherwise.

For a while, if asked what the national anthem of Bulgaria was, Siri would reply with “the national anthem of Bulgaria is Despacito.” It still isn’t clear what caused this glitch, but it may have to do with Wikipedia vandalism or Apple's switch to Google search. Luckily, Apple patched it shortly after it went viral.

3 Texting a Crush (Accidentally)

Siri’s goofs aren’t always just mistaken answers. At times, they can lead to embarrassing consequences. At least, that was the case when one woman was jokingly talking to the digital assistant (something we’ve all probably done at least once).

Apparently, the woman asked Siri whether her crush would ever text her. In response, Siri accidentally sent “will you ever text me” to that person. Of course, since Siri asks users to confirm before sending any texts, this is more of a case of human error than anything else. But it’s still basically a nightmare for anyone who triple-checks their texts to make sure they're headed to the right person.

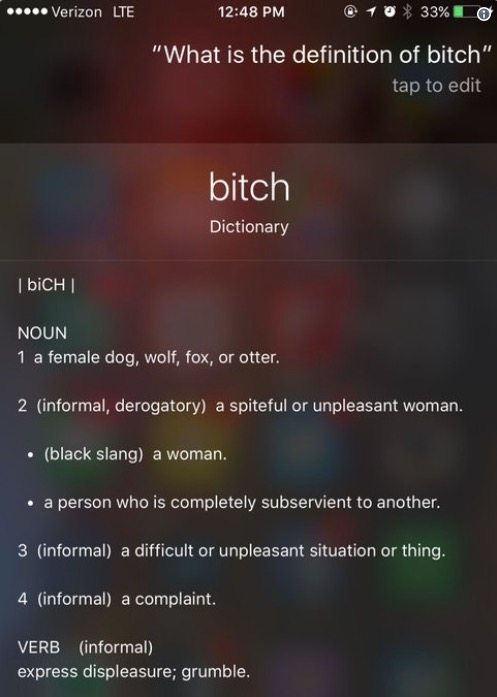

2 An Offensive Definition

Normally, Siri’s wrong answers are funny — and at worst, slightly annoying. But at one point in 2015, the digital assistant included a definition that was offensive and quickly called out by activists. When asked to define “bitch,” Siri would give the correct biological definition of a female canine.

But the results also included a second definition that said the word was “black slang” for a woman. This didn’t go over well, and rightfully so. Again, it isn’t entirely Apple’s fault: Siri appears to have pulled the definition from the Oxford Dictionary. But other sources did tag the word with an explicit “offensive” label, so the goof arguably should have been caught earlier.

1 Siri Didn’t Understand Women’s Basketball

Even if you aren’t a sports person, you probably expect Siri to know a few things about basketball. But the digital assistant apparently missed a massive change that resulted in a small but pretty funny mistake throughout 2017.

First, some context: back in 2015, NCAA women’s basketball switched from two halves to four quarters per game. But Siri missed that switch and, presumably, treated the third and fourth quarters as overtime periods. As a result, when asked about recent scores, the digital assistant would claim that every game had gone into double overtime.